What Problem Does This Solve?

In real systems, allowing unlimited requests from a single client can:

- Overload your server

- Cause unfair resource usage

- Enable abuse (DDoS, brute force, scraping)

A rate limiter protects your service by controlling how many requests a client can make over time.

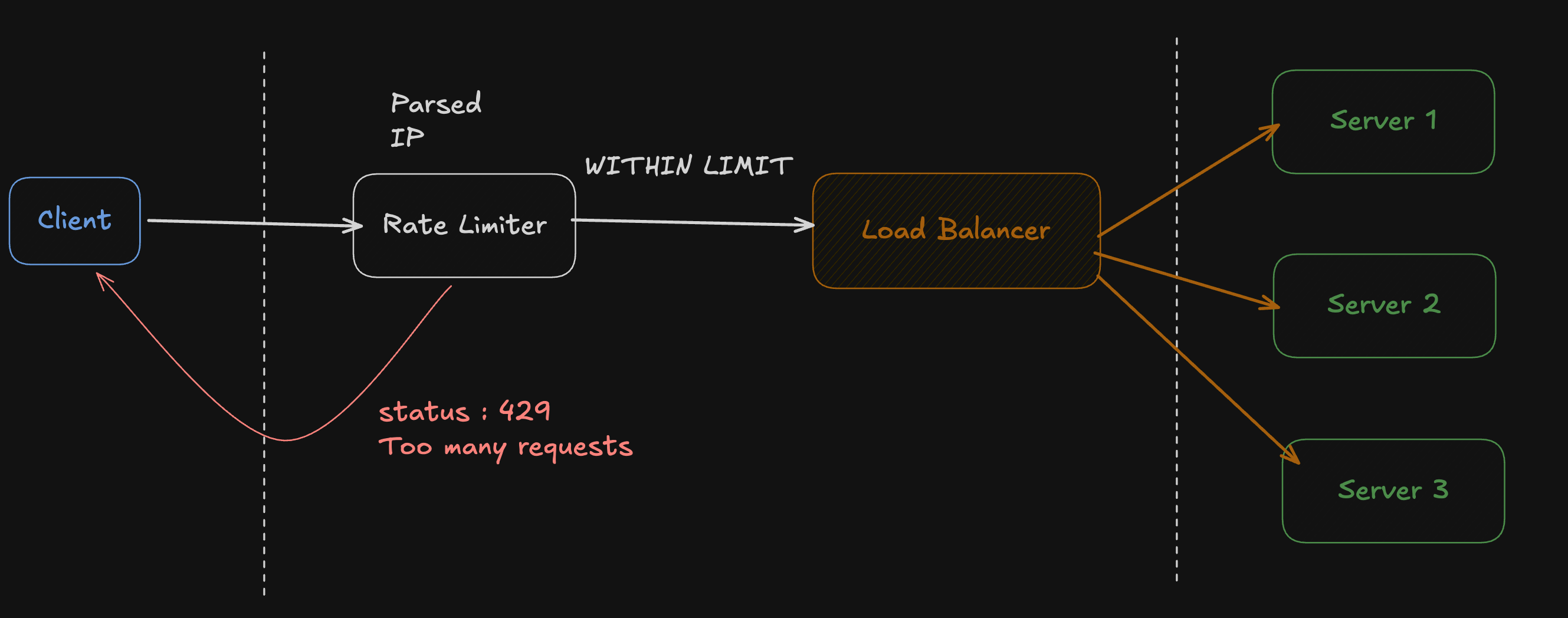

High-Level Architecture

Core Concept: Token Bucket Algorithm

The rate limiter uses the token bucket algorithm: each client is assigned a bucket that holds a fixed maximum number of tokens. Every incoming request consumes one token. Tokens are replenished at a fixed interval defined by the refill rate, never exceeding the bucket's capacity. If a request arrives when the bucket is empty, it is rejected with an HTTP 429 “Too Many Requests” response; otherwise, it proceeds to the service.
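To make the behavior concrete, here is a minimal simulation of one bucket in Go. It is a sketch, not this project's code: the function name `simulate` and the parameters `capacity` and `refillEvery` are assumptions, and it assumes one token is added per elapsed interval and that arrival times are given in ascending order.

```go
package main

import (
	"fmt"
	"time"
)

// simulate replays request arrival times (offsets from a common start, in
// ascending order) against a single token bucket and reports, per request,
// whether it would be allowed. All names here are hypothetical.
func simulate(capacity int, refillEvery time.Duration, arrivals ...time.Duration) []bool {
	tokens := capacity           // the bucket starts full
	var lastRefill time.Duration // time of the last refill tick already applied
	results := make([]bool, 0, len(arrivals))

	for _, at := range arrivals {
		// Add one token per whole interval elapsed, capped at capacity.
		ticks := int((at - lastRefill) / refillEvery)
		if ticks > 0 {
			tokens += ticks
			if tokens > capacity {
				tokens = capacity
			}
			lastRefill += time.Duration(ticks) * refillEvery
		}
		if tokens > 0 {
			tokens-- // an allowed request spends one token
			results = append(results, true)
		} else {
			results = append(results, false) // would become an HTTP 429
		}
	}
	return results
}

func main() {
	ms := time.Millisecond
	// Capacity 2, one token per second: three quick requests, then one after a refill.
	fmt.Println(simulate(2, time.Second, 0, 100*ms, 200*ms, 1200*ms))
	// Prints: [true true false true]
}
```

The third request is rejected because both tokens are already spent; the fourth succeeds because one full refill interval has passed by then.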

Important Components

1. Limiter

Manages all client buckets.

- One bucket per client key (IP)

- Thread-safe access

- Responsible for bucket creation and cleanup

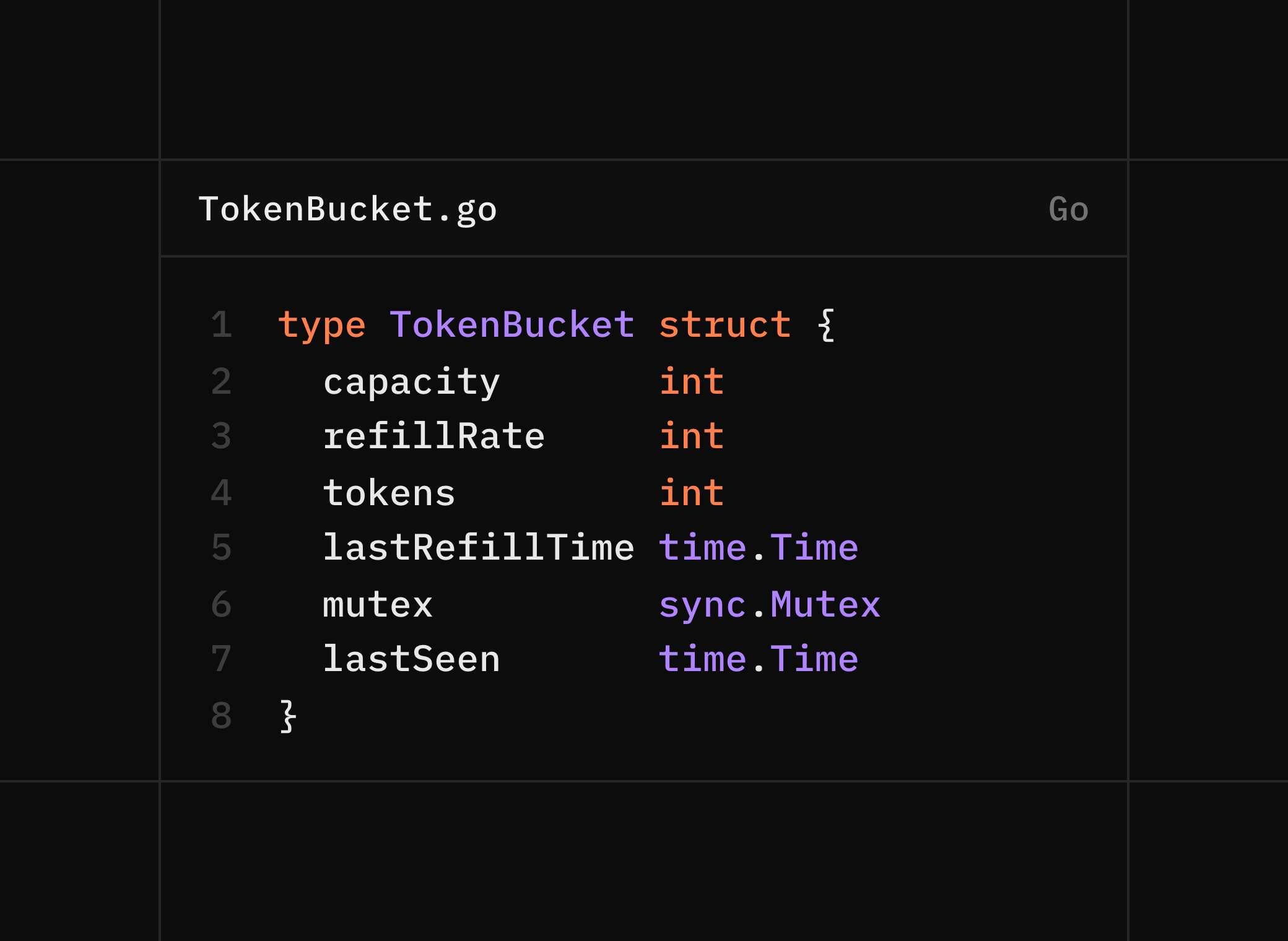
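The three responsibilities listed above could be sketched roughly as follows. This is an illustration under assumptions, not the project's actual code: the `bucket` stand-in type, the `lastSeen` field, and the `Get` and `Cleanup` method names are all hypothetical, and a single mutex guards the map.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// bucket is a stand-in for the per-client TokenBucket; only the fields
// needed for this sketch are included.
type bucket struct {
	tokens   int
	lastSeen time.Time
}

// Limiter owns one bucket per client key (here, an IP string).
type Limiter struct {
	mu       sync.Mutex // guards the buckets map
	buckets  map[string]*bucket
	capacity int
}

func NewLimiter(capacity int) *Limiter {
	return &Limiter{buckets: make(map[string]*bucket), capacity: capacity}
}

// Get returns the bucket for key, creating a full one the first time the
// key is seen, and records when the client was last active.
func (l *Limiter) Get(key string) *bucket {
	l.mu.Lock()
	defer l.mu.Unlock()
	b, ok := l.buckets[key]
	if !ok {
		b = &bucket{tokens: l.capacity}
		l.buckets[key] = b
	}
	b.lastSeen = time.Now()
	return b
}

// Cleanup deletes buckets idle for longer than maxIdle and returns how many
// were removed, so memory does not grow with every client ever seen.
func (l *Limiter) Cleanup(maxIdle time.Duration) int {
	l.mu.Lock()
	defer l.mu.Unlock()
	now := time.Now()
	removed := 0
	for key, b := range l.buckets {
		if now.Sub(b.lastSeen) > maxIdle {
			delete(l.buckets, key)
			removed++
		}
	}
	return removed
}

func main() {
	l := NewLimiter(10)
	l.Get("203.0.113.7")
	l.Get("203.0.113.7") // same client, same bucket
	l.Get("198.51.100.4")
	fmt.Println(len(l.buckets)) // 2
}
```

In a long-running server, `Cleanup` would typically be called periodically from a background goroutine; that wiring is left out here.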

2. TokenBucket

Represents rate limits for a single client.

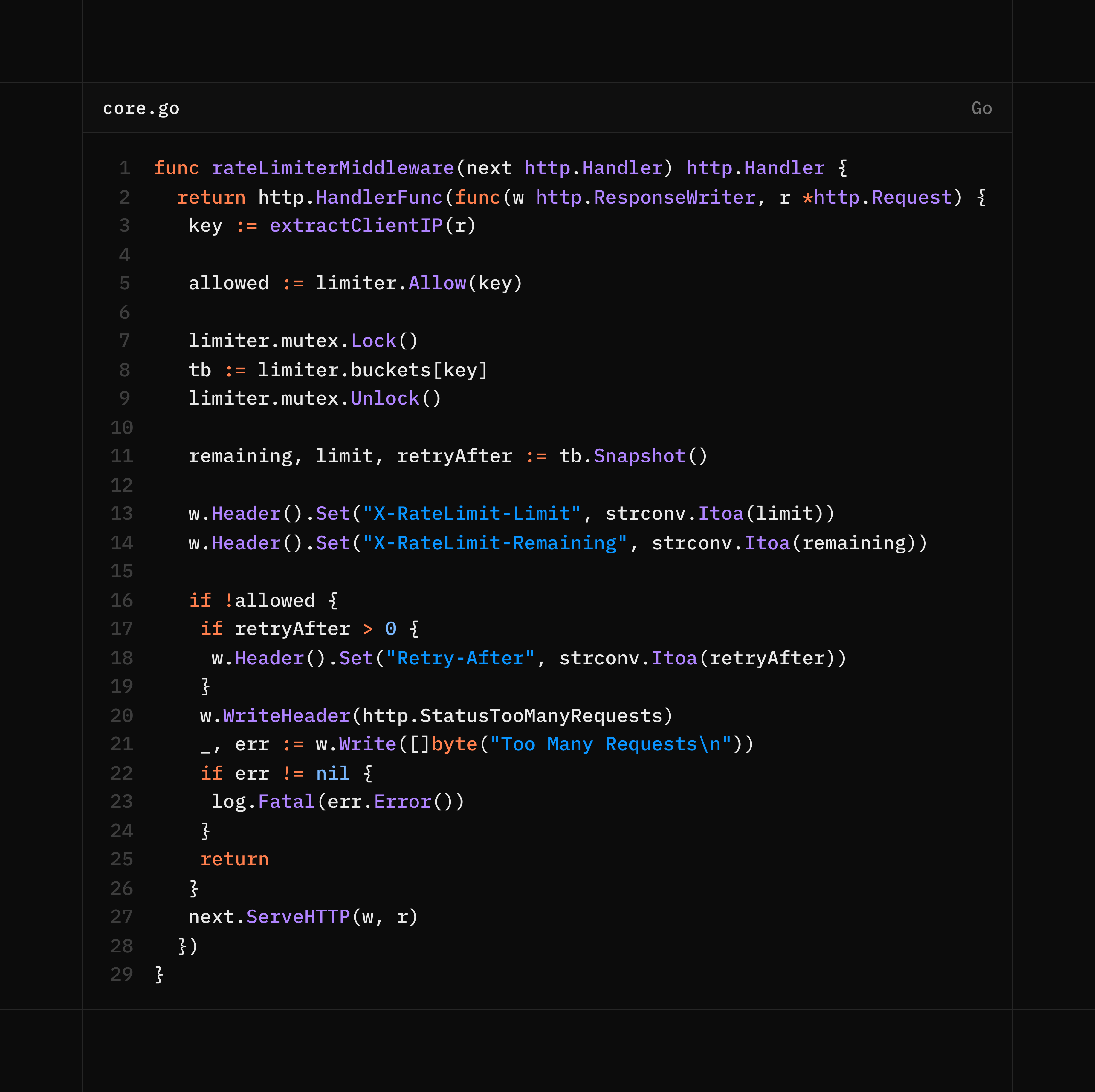

3. Middleware

Applied as HTTP middleware.

Sets the following response headers:

- X-RateLimit-Limit

- X-RateLimit-Remaining

- Retry-After (on rejected requests)

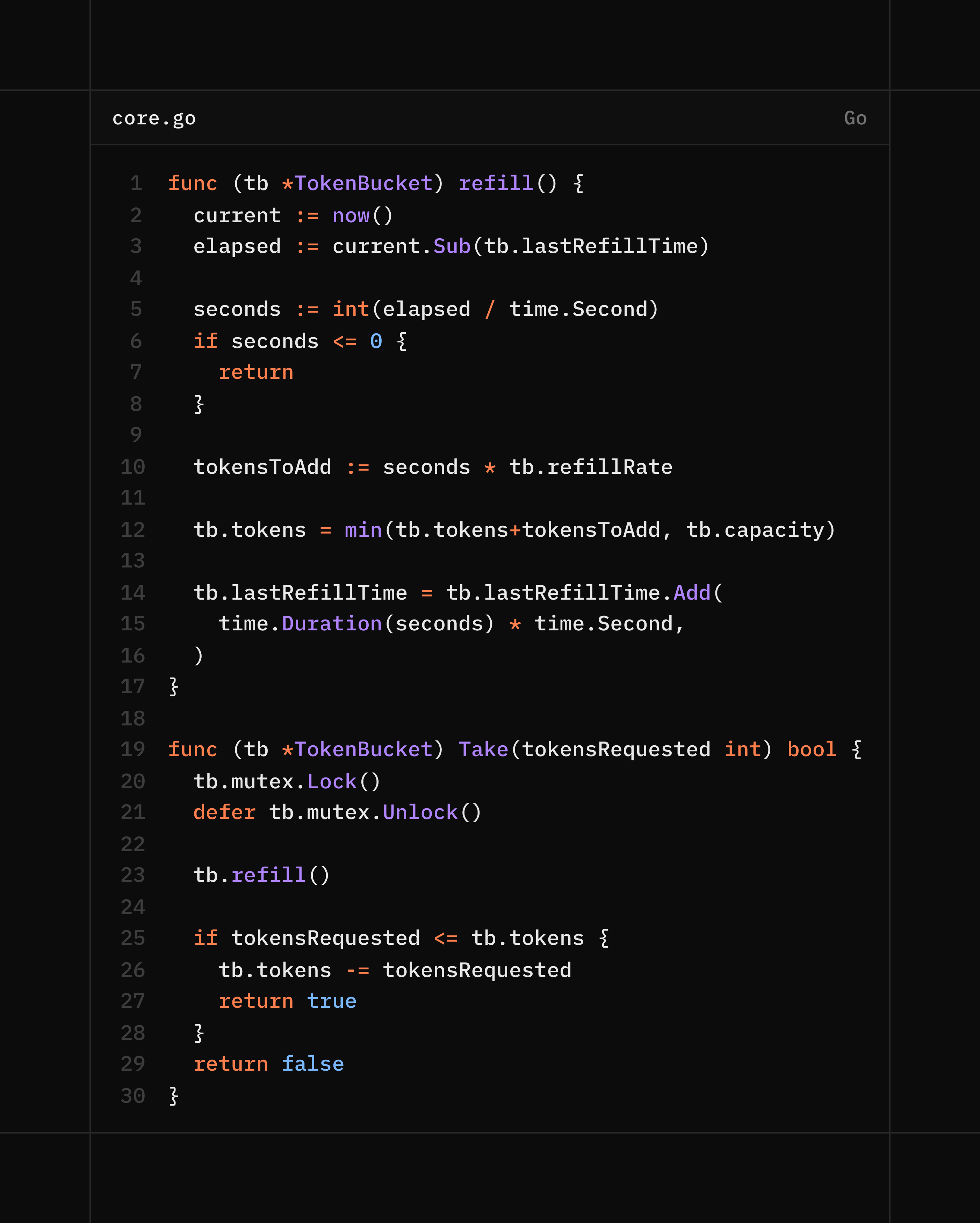
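A rough sketch of what such middleware might look like with Go's standard `net/http` package. The `decision` callback type, `countingDecision`, `okHandler`, and `probe` are hypothetical helpers introduced only for this example; in the real project the decision would come from the Limiter.

```go
package main

import (
	"fmt"
	"net"
	"net/http"
	"net/http/httptest"
	"strconv"
)

// decision is a hypothetical callback: given a client key, it reports how
// many tokens remain and whether the request may proceed.
type decision func(key string) (remaining int, allowed bool)

// RateLimit wraps next, sets the rate-limit headers, and answers 429 when
// decide rejects the request.
func RateLimit(limit, retryAfterSec int, decide decision, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// Use the connection's IP as the client key; fall back to the raw address.
		ip, _, err := net.SplitHostPort(r.RemoteAddr)
		if err != nil {
			ip = r.RemoteAddr
		}
		remaining, ok := decide(ip)
		w.Header().Set("X-RateLimit-Limit", strconv.Itoa(limit))
		w.Header().Set("X-RateLimit-Remaining", strconv.Itoa(remaining))
		if !ok {
			w.Header().Set("Retry-After", strconv.Itoa(retryAfterSec))
			http.Error(w, "rate limit exceeded", http.StatusTooManyRequests)
			return
		}
		next.ServeHTTP(w, r)
	})
}

// countingDecision is a toy stand-in for the Limiter: it allows the first n
// calls regardless of key, then rejects everything.
func countingDecision(n int) decision {
	left := n
	return func(string) (int, bool) {
		if left == 0 {
			return 0, false
		}
		left--
		return left, true
	}
}

// okHandler is the protected service for this demo.
var okHandler = http.HandlerFunc(func(w http.ResponseWriter, _ *http.Request) {
	fmt.Fprintln(w, "ok")
})

// probe sends one in-memory GET request through h and returns the recorded response.
func probe(h http.Handler) *httptest.ResponseRecorder {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	return rec
}

func main() {
	h := RateLimit(2, 1, countingDecision(2), okHandler)
	for i := 0; i < 3; i++ {
		rec := probe(h)
		fmt.Println(rec.Code, rec.Header().Get("X-RateLimit-Remaining"))
	}
	// Prints:
	// 200 1
	// 200 0
	// 429 0
}
```

Taking the decision as a callback keeps the HTTP layer independent of how buckets are stored, which is one possible design rather than the only one.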

4. Methods

The TokenBucket struct exposes methods to spend and refill tokens:

- Refill: adds tokens back to the bucket

- Take: spends one token for a request
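As an illustration of how `Take` and `Refill` might fit together, here is a minimal thread-safe sketch. The field names, the `NewTokenBucket` constructor, and the method signatures are assumptions; the project's real signatures may differ.

```go
package main

import (
	"fmt"
	"sync"
)

// TokenBucket holds the token count for one client. Field names are assumed.
type TokenBucket struct {
	mu       sync.Mutex // per-bucket lock, so clients do not contend with each other
	tokens   int
	capacity int
}

// NewTokenBucket returns a bucket that starts full.
func NewTokenBucket(capacity int) *TokenBucket {
	return &TokenBucket{tokens: capacity, capacity: capacity}
}

// Take spends one token if any is available and reports how many remain.
func (b *TokenBucket) Take() (remaining int, ok bool) {
	b.mu.Lock()
	defer b.mu.Unlock()
	if b.tokens == 0 {
		return 0, false
	}
	b.tokens--
	return b.tokens, true
}

// Refill adds n tokens without ever exceeding the bucket's capacity.
func (b *TokenBucket) Refill(n int) {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.tokens += n
	if b.tokens > b.capacity {
		b.tokens = b.capacity
	}
}

func main() {
	b := NewTokenBucket(2)
	fmt.Println(b.Take()) // 1 true
	fmt.Println(b.Take()) // 0 true
	fmt.Println(b.Take()) // 0 false
	b.Refill(5)           // capped at capacity 2
	fmt.Println(b.Take()) // 1 true
}
```

One way to drive `Refill` would be a `time.Ticker` firing once per refill interval; that loop is omitted to keep the sketch short.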

HTTP Behavior

✓ Allowed Request

HTTP 200 OK

X-RateLimit-Limit: 10

X-RateLimit-Remaining: 7

✗ Rate Limited Request

HTTP 429 Too Many Requests

Retry-After: 1

X-RateLimit-Limit: 10

X-RateLimit-Remaining: 0

Advantages

- In-memory and fast

- Per-client isolation

- Fine-grained locking

- Deterministic refill

- Clean HTTP semantics

Limitations

- Single-node only

- Resets on restart

- IP-based

When to Use This

Never (you have better options 😆)

Final Note

This project is intentionally simple. If you understand this deeply, you understand the foundation of most production rate limiters.